In a recent project I had the opportunity to work with MongoDB. Admittedly, this was my first real attempt at using it in any serious capacity.

For this project we created a number of Drupal websites with a centralised Data Object Store, referred to simply as the “GDO” (Global Data Object Store).

The main concept of the GDO is that data objects (in the form of JSON documents) can be stored centrally and accessed by every site/server within the AWS VPC (Virtual Private Cloud). These objects are delivered through a RESTful API sitting in front of the MongoDB.

The Problem

During the initial development of the GDO we were working on a single EC2 instance, which hosted both the API and MongoDB. During development this is fine; in production, however, it becomes a single point of failure.

The Solution

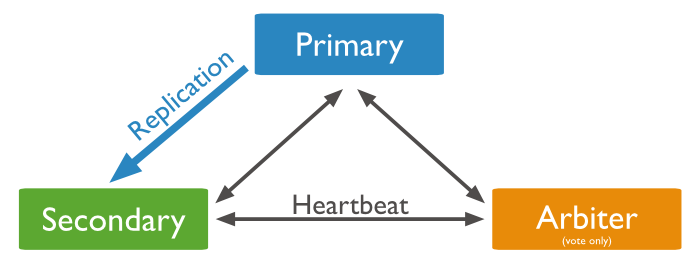

The first key point to address was making sure that MongoDB was replicated. As this is a production environment, some form of fault tolerance is required to deliver good uptime. The budget on this project was tight, so we went for the bare essentials needed to improve fault tolerance.

The minimum MongoDB setup for this is a replica set with one PRIMARY and one SECONDARY, each in a different Availability Zone. With only two voting members, losing either node leaves the survivor unable to reach the majority needed to elect a primary, so an ARBITER is added as a third voter to break the tie.

Source: MongoDB.org

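The reasoning behind the arbiter comes down to simple majority arithmetic. The following is only a sketch of that arithmetic, not MongoDB's election code; the function names `majority` and `faultTolerance` are made up for illustration.

```javascript
// Votes required to elect a primary: a strict majority of the voting members.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// Number of voting members that can be lost while a majority is still reachable.
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

// Compare two voters (primary + secondary) with three (primary + secondary + arbiter).
for (const n of [2, 3]) {
  console.log(`${n} voters: majority ${majority(n)}, tolerates ${faultTolerance(n)} failure(s)`);
}
// prints:
// 2 voters: majority 2, tolerates 0 failure(s)
// 3 voters: majority 2, tolerates 1 failure(s)
```

Adding the arbiter does not change the majority required (still 2), but it means one node can fail and the remaining two can still elect a primary.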

In a nutshell the replica set is easy to set up - simply start up the primary and secondary nodes with the following added to the mongod.conf file:

replication:
  replSetName: rs0

Once you have started the MongoDB servers up, on your first node simply log in to the database server and run:

rs.initiate()

Then proceed to add the remaining nodes with:

rs.add("server.ip.or.url:27017")

If you have an even number of data-bearing nodes then you will need to add an arbiter. This is merely a small EC2 instance that holds no data and exists only to vote in elections, giving the set the odd number of votes needed to elect a primary.
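As an alternative to adding members one at a time, the whole set (arbiter included) can be described in a single configuration document and handed to `rs.initiate()`. The sketch below only builds that document in plain JavaScript; the helper name `buildReplicaSetConfig` and the hostnames are assumptions for illustration, not what we ran on the project.

```javascript
// Build a replica set config document: data-bearing members first,
// followed by an optional arbiter flagged with arbiterOnly.
function buildReplicaSetConfig(setName, dataHosts, arbiterHost) {
  const members = dataHosts.map((host, index) => ({ _id: index, host }));
  if (arbiterHost) {
    members.push({ _id: members.length, host: arbiterHost, arbiterOnly: true });
  }
  return { _id: setName, members };
}

const config = buildReplicaSetConfig(
  "rs0",
  ["db1.example.com:27017", "db2.example.com:27017"], // hypothetical hostnames
  "arbiter.example.com:27017" // hypothetical hostname
);
console.log(JSON.stringify(config, null, 2));
// In the mongo shell on the first node this would be applied with:
//   rs.initiate(config)
```

If the set is already running, the mongo shell also offers `rs.addArb("host:port")` to add an arbiter to it.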

After addressing the issue of the replica set the next stage was to look at the API to interact with the MongoDB. This needed separating from the MongoDB server as it would need to connect to the replica set and interact with the elected Primary. The API also needed some resilience adding so we created two new EC2s sitting in different Availability Zones.

All traffic to the GDO APIs is over HTTPS (TLS) and internal to the VPC. For this Amazon provides a fantastic Load Balancing service called “Elastic Load Balancer” (ELB). The ELB provides health checks and lets us seamlessly balance the load between the two API nodes. We can also very quickly scale up to include more nodes as time goes on with no huge changes to the infrastructure.

So, what did we end up with?

On the left we have the original GDO store - a single (huge) monolithic EC2 instance. As you can see, it was a single point of failure in itself. On the right is a load-balanced, cross-Availability-Zone Global Data Object Store that is a lot more fault tolerant, with room to scale up and/or out.

What is more, the only major change we had to make to the codebase for the GDO APIs was the connection string: we had to tell PHP that we are connecting to a replica set. A sample configuration is below:

<?php

/*
 * Sample MongoClient object for MongoDB Replica Set
 */
$m = new MongoClient(
    // Seed list: the mongodb:// scheme appears once, hosts are comma-separated
    "mongodb://db1.example.com:27017,db2.example.com:27017",
    array('replicaSet' => 'rs0', 'username' => 'someuser', 'password' => 'sTr0Ngp4ss!')
);
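For reference, the standard MongoDB connection URI lists the `mongodb://` scheme once, followed by a comma-separated host list and any options in the query string. The sketch below assembles such a URI in plain JavaScript; it is not the PHP code used on the project, and the helper name `buildMongoUri` is an assumption for illustration.

```javascript
// Assemble a replica set connection URI from its parts.
// Credentials are percent-encoded so characters such as "@" or ":" stay unambiguous.
function buildMongoUri({ hosts, replicaSet, username, password }) {
  const credentials = username
    ? `${encodeURIComponent(username)}:${encodeURIComponent(password)}@`
    : "";
  return `mongodb://${credentials}${hosts.join(",")}/?replicaSet=${encodeURIComponent(replicaSet)}`;
}

const uri = buildMongoUri({
  hosts: ["db1.example.com:27017", "db2.example.com:27017"],
  replicaSet: "rs0",
  username: "someuser",
  password: "sTr0Ngp4ss!",
});
console.log(uri);
// prints: mongodb://someuser:sTr0Ngp4ss!@db1.example.com:27017,db2.example.com:27017/?replicaSet=rs0
```

Listing more than one host matters: if the first seed is down, the driver can still discover the replica set through the second.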

Future Considerations

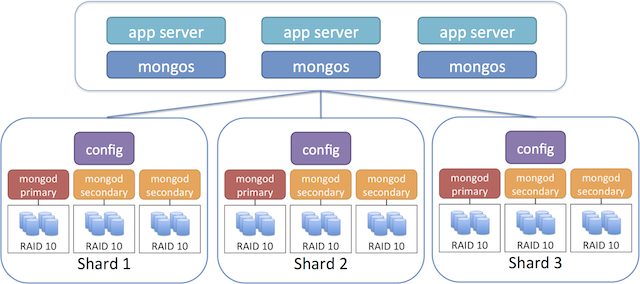

In a perfect world where money was no object we would have gone for a sharded cluster built from replica sets to improve performance, ideally with RAID 10 Elastic Block Store (EBS) volumes with provisioned IOPS on each node (see diagram). This would work even better cross-region, so that short of Amazon Web Services being completely wiped off the map or the end of society itself, the GDO would be almost bulletproof. Perhaps one day the opportunity will arise for something along those lines.

Source: MongoDB Docs
