I thought I would write up my experiences with setting up Ghost served over HTTPS as it’s quite a good way of jumping into Docker and using nginx as a reverse proxy to route traffic between Docker applications.

In this tutorial we will use the following applications:

- Debian (or other Linux)

- nginx (Web Server, Reverse Proxy and Load Balancer)

- Certbot (client for Let’s Encrypt certificates)

- Docker (containerization software)

- Docker-Compose (tool for defining multi-container environments, useful for single containers too!)

- Ghost (a nice weblog tool written in node.js).

Eventually we will have something like the below:

Prerequisites

I’m assuming you already have a Linux machine, be it a dedicated server, a VM, an EC2 or a Droplet.

Your first step is to go grab Docker and install it.

Make sure you also have a cup of coffee; you may need a reboot.

Also, install docker-compose. If you run Python and have pip installed then do the following:

pip install docker-compose

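Also, don’t forget to add your regular user to the docker group, so that we don’t have to run Docker commands as root. Run the following as root (or with sudo), then log out and back in for it to take effect: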

usermod -aG docker [username]

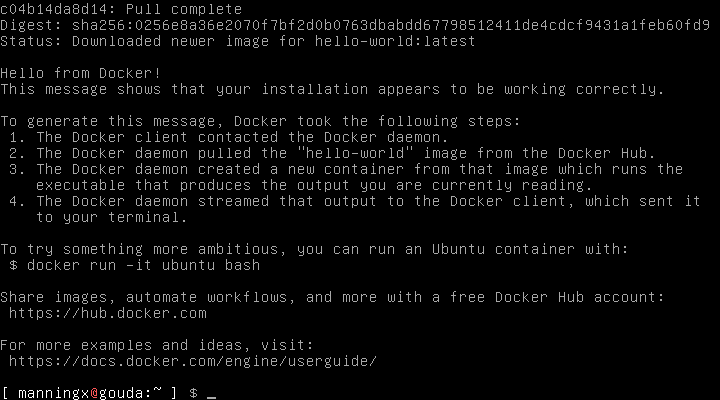
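A quick way to confirm the group change has taken effect is to look at the groups of your current session. The snippet below is only a minimal sketch: the helper name in_group_list is made up for illustration, and it simply scans the output of id -nG.

```shell
#!/usr/bin/env bash
# in_group_list GROUP "LIST": succeed if GROUP appears in the space-separated LIST.
# (Hypothetical helper for this tutorial, not part of Docker.)
in_group_list() {
    local wanted="$1" g
    for g in $2; do
        if [ "$g" = "$wanted" ]; then
            return 0
        fi
    done
    return 1
}

# id -nG prints the group names of the current session.
if in_group_list docker "$(id -nG 2>/dev/null)"; then
    echo "docker group is active: no sudo needed for docker commands"
else
    echo "docker group not active yet: log out and back in after running usermod"
fi
```

If it reports that the group is not active, a fresh login session is usually all that is missing.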

You can check your install with the hello-world image by running:

docker run hello-world

You will see the following on successful installation.

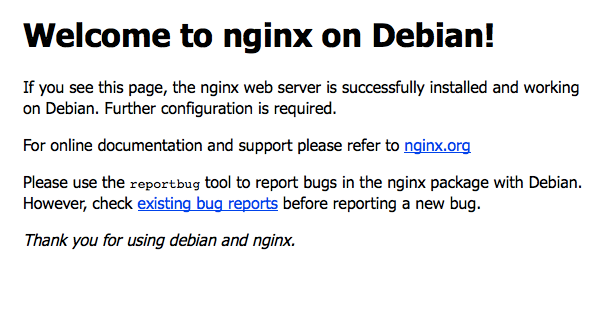

When you are done with Docker, I would install nginx. In this tutorial we are installing it from the package manager. On Debian/Ubuntu:

sudo apt-get install nginx

Check nginx is installed and running by visiting http://your.ip.or.hostname/ - you should get a stock “Welcome to nginx!” page:

Set up our project

Create a new directory to host our Ghost project in Docker. We need somewhere to store our config files and our environment files, in a single place that is easy to back up to somewhere like S3.

mkdir -p /var/www/docker/ghostblog/config/

cd /var/www/docker/ghostblog/

Creating a Docker-Compose file

As mentioned we are using Docker-Compose to organise our Docker containers. In order to use Docker-Compose we need a file called docker-compose.yml.

vim docker-compose.yml

Below is an example that I feel works well.

ghost:
  image: ghost:latest
  restart: always
  ports:
    - 2368:2368
  volumes:
    - "./config:/var/lib/ghost"
  env_file: ./config/site.env

So, let’s walk through the YAML. We have defined our first container (by name) as ghost:, which pulls the image ghost:latest from hub.docker.com. This is much like pulling from a git repository, and just as with git you can pull a specific tag instead. Here we pull ‘latest’ so that we pick up the latest security updates for Ghost each time we re-pull the image.

We also want the container to restart: always whenever the node.js process dies. This helps us recover from any fatal errors as best we can - basically the container should only stop when we tell it to.

Next we are setting up the networking. This defines which ports we want open and how they map onto the container’s ports. This is done using ports: and for each item in the list we use - host:container as the format. Reading the documentation on the Ghost container I know that port 2368 is used as the listening port. For the sake of this configuration I am going to open the same port up on the host.

Just a reminder to anyone using UFW, remember that Docker is (by default) allowed to manage iptables and these changes will not show in UFW. This can be fixed by changing the startup options of your Docker Daemon. Guide here.
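One way to sidestep the UFW issue, once nginx is sitting in front of Ghost, is to publish the port on the loopback interface only. This is a sketch rather than the setup used in this post: it assumes the same compose file as above and writes a variant into a scratch directory so you can review it before copying it over docker-compose.yml.

```shell
#!/usr/bin/env bash
# Write a variant of the compose file into a scratch directory (not the live project).
scratch_dir="$(mktemp -d)"

cat > "$scratch_dir/docker-compose.yml" <<'EOF'
ghost:
  image: ghost:latest
  restart: always
  ports:
    # host-ip:host-port:container-port - only reachable from the host itself
    - "127.0.0.1:2368:2368"
  volumes:
    - "./config:/var/lib/ghost"
  env_file: ./config/site.env
EOF

echo "wrote $scratch_dir/docker-compose.yml"
```

With this binding the blog is no longer reachable at http://your.ip.or.hostname:2368/ from outside, only from the host itself or through a reverse proxy running on it, so it is best applied after you have finished testing on port 2368.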

Next, volumes: mounts a directory on the host onto a directory within the container. The Ghost image’s Dockerfile declares /var/lib/ghost as a volume, and this is our configuration directory. A volume is again a list item in the config, formatted like so: - /path/on/host:/path/in/container. In this case we map the relative path ./config on the host to /var/lib/ghost in the container.

Finally, we are going to pass environment variables in a file (env_file:) called ./config/site.env.

And we are done with Docker for now.

Creating the .env file

The .env file in our config directory will control the environment we are working in. In node.js you can run in a production environment or a development environment - these mean separate databases so you don’t contaminate your blog during development.

vim config/site.env

Our file is going to start off like this:

# Environmental Variables

# NODE_ENV=production

We have commented out NODE_ENV=production for now so that when Ghost first starts up it is in Development mode.

Let’s fire up the container.

To start the container, run:

docker-compose up

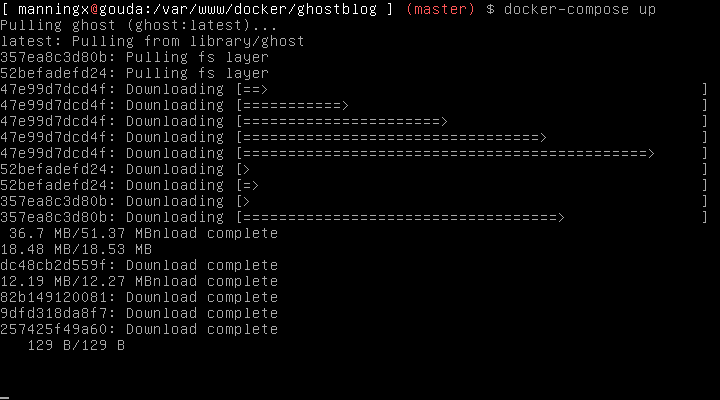

This will load up the container. You will first see the Ghost image being pulled from Docker Hub:

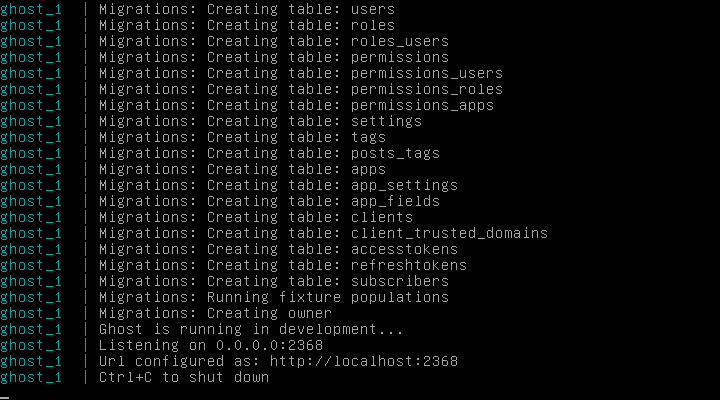

Then the output from the Ghost container:

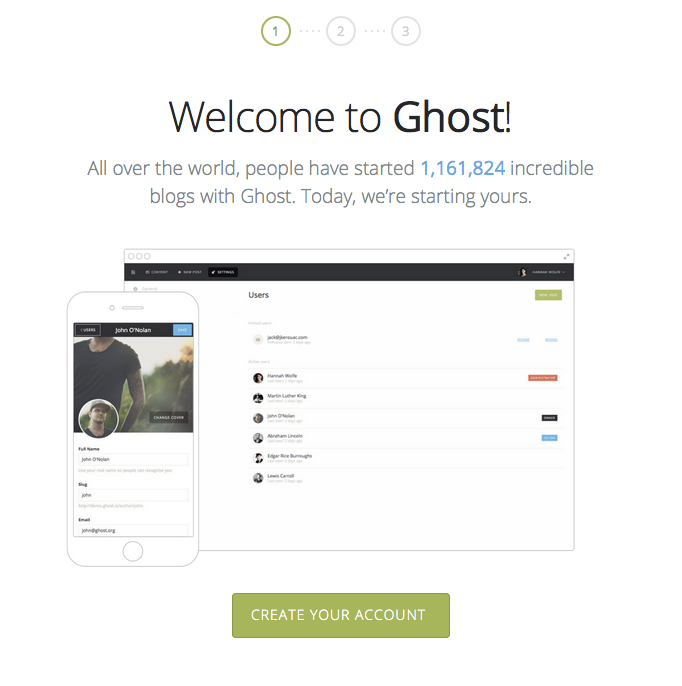

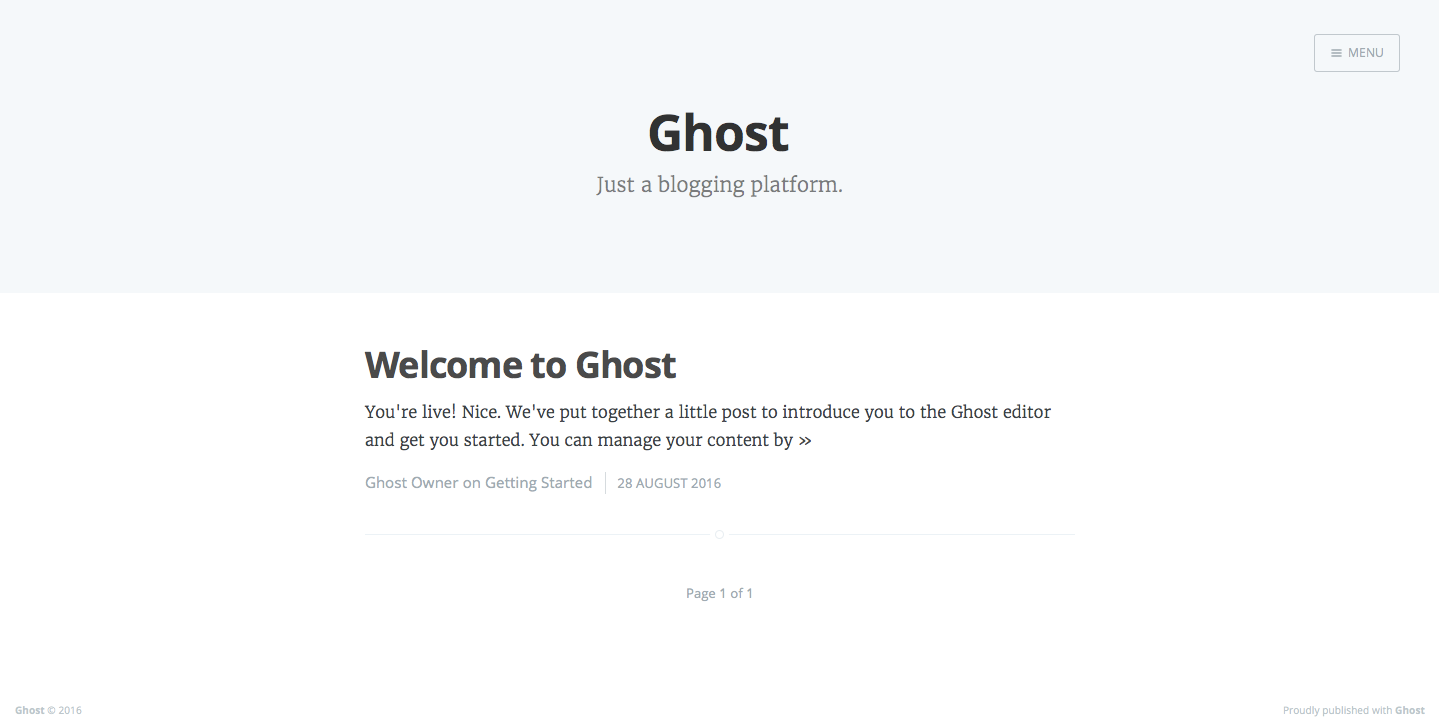

You can now go visit your blog by browsing to http://your.ip.or.hostname:2368/. You should see the welcome blog page:

Next, use Ctrl+C to stop the Ghost container.

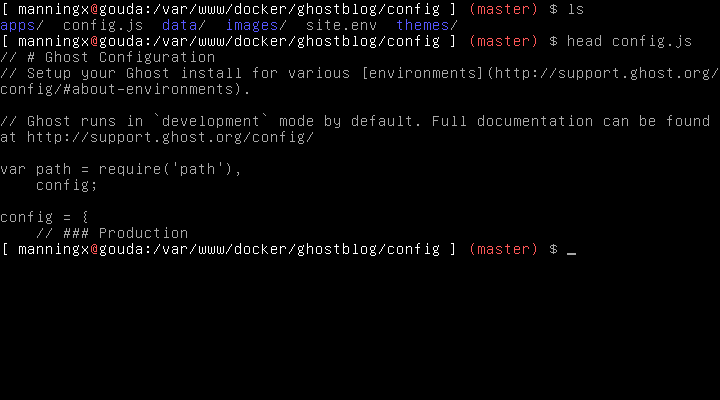

Configure your Ghost Blog

If you now change directory to the ./config directory, you will notice that it has a lot more files and directories in there. These have been created by the Ghost Docker container. In this directory is a new file called config.js - this file is used for configuring your Ghost blog. Here we are going to define development and production environment configurations.

vim config/config.js

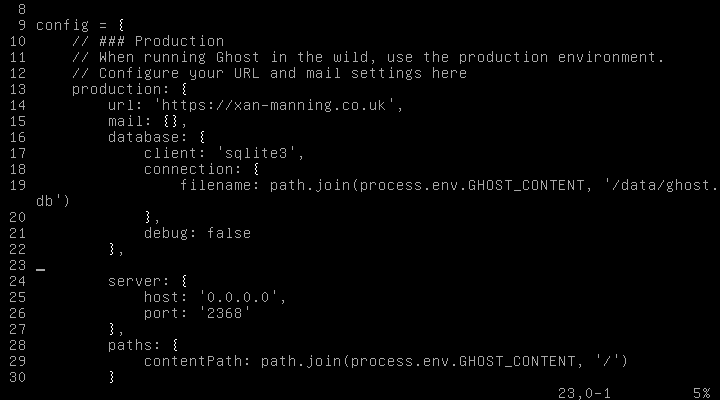

Firstly we shall configure the production environment. In the config = {} object we have a section production: {}, - here there is a variable called url:, you need to change this to the production URL that you will access your blog on. By default this is set to ‘http://my-ghost-blog.com’.

We will be setting this to be served over HTTPS so we need to set it accordingly:

url: 'https://xan-manning.co.uk',

There is also a slight bug in the production config that will cause you some problems later on, so best patch this now. After the server: {}, section, paste the following:

paths: {
    contentPath: path.join(process.env.GHOST_CONTENT, '/')
}

Make sure you have a , (comma) after the closing } of the server: section.

Mine looks like this:

Now let’s get ready to go live. Edit the site.env file.

vim config/site.env

We now want to uncomment the production environment variable as below:

# Environmental Variables

NODE_ENV=production
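If you would rather script this switch than edit the file by hand, the uncommenting can be done with sed. The following is a minimal sketch: the function name enable_production is made up for illustration, and the demo runs against a throwaway copy rather than the real config/site.env.

```shell
#!/usr/bin/env bash
# enable_production FILE: uncomment a "# NODE_ENV=production" line in FILE.
# (Hypothetical helper name; it only edits the file you pass in.)
enable_production() {
    local env_file="$1" tmp status=0
    tmp="$(mktemp)" || return 1
    # Write the edited copy to a temp file, then overwrite the original,
    # which keeps the original file's permissions.
    sed 's/^#[[:space:]]*\(NODE_ENV=production\)[[:space:]]*$/\1/' "$env_file" > "$tmp" \
        && cat "$tmp" > "$env_file" || status=1
    rm -f "$tmp"
    return "$status"
}

# Demonstrate on a throwaway copy with the same contents as our site.env.
demo_env="$(mktemp)"
printf '# Environmental Variables\n# NODE_ENV=production\n' > "$demo_env"
enable_production "$demo_env"
cat "$demo_env"
```

Against the real project you would call it as enable_production config/site.env from the ghostblog directory. Other comment lines are left untouched because the pattern only matches the NODE_ENV=production line.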

Setting up nginx with HTTPS using Certbot

For best practice we are going to serve our site over HTTPS. To do this we are going to use Certbot, provided by the Electronic Frontier Foundation (EFF). This is a simple, Python-based client created to issue certificates from Let’s Encrypt.

Installing Certbot

Visit the Certbot website and choose your installation. Remember you are using nginx in this configuration.

Setting up nginx

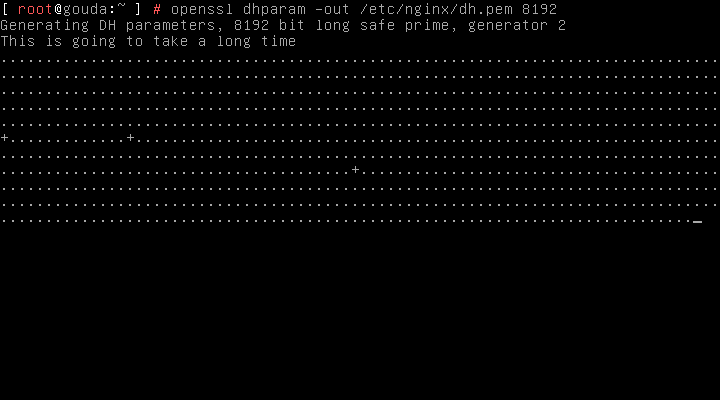

First off, let’s make our Diffie-Hellman param file:

openssl dhparam -out /etc/nginx/dh.pem 8192

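Grab another coffee; this takes a while. Generating 8192-bit parameters can take a very long time, so if you are in a hurry a smaller size such as 4096 is a common compromise.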

We’re now going to add the following to our default vhost. This will allow us to create our first certificate.

vim /etc/nginx/sites-available/default

Under the location / {} block insert the following:

location ~ /\.well-known\/acme-challenge {
    root /var/www/html;
    allow all;
}

Restart nginx:

systemctl restart nginx
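Before asking Let’s Encrypt for a certificate, it can save a failed attempt to check that files dropped into the challenge directory really are served by nginx. The function below is a rough sketch of such a probe, not something Certbot provides: the name check_acme_webroot is invented here, and it assumes curl is installed and that you can write to the webroot.

```shell
#!/usr/bin/env bash
# check_acme_webroot WEBROOT BASE_URL
# Drops a probe file into WEBROOT/.well-known/acme-challenge/, fetches it over
# HTTP from BASE_URL, then removes it again. Succeeds only if the body matches.
# (Hypothetical helper for this tutorial.)
check_acme_webroot() {
    local webroot="$1" base_url="$2"
    local dir="$webroot/.well-known/acme-challenge"
    local token="probe-$$"
    local body status=1

    mkdir -p "$dir" || return 1
    printf 'ok\n' > "$dir/$token" || return 1

    # --fail makes curl return an error on HTTP 4xx/5xx responses.
    body="$(curl --silent --fail --max-time 5 \
        "$base_url/.well-known/acme-challenge/$token" || true)"
    [ "$body" = "ok" ] && status=0

    rm -f "$dir/$token"
    return "$status"
}

# Usage (as a user who can write to the webroot):
#   check_acme_webroot /var/www/html http://your.ip.or.hostname \
#       && echo "challenge path is reachable"
```

If the probe fails, check that the location block landed inside the right server block and that nginx was restarted.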

Now we’re going to create a vhost to serve our Ghost blog. In /etc/nginx/sites-available create your new config file.

vim /etc/nginx/sites-available/xan-manning.co.uk

In here we need to define two blocks. The first defines our HTTP server running on port 80 and the second our HTTPS server on port 443. We won’t be doing a proxy pass on port 80, merely serving a 301 redirect to HTTPS.

Below is a simple config, remember to edit with your own URLs.

server {
    listen 80;
    listen [::]:80;
    server_name xan-manning.co.uk www.xan-manning.co.uk;

    location ~ /\.well-known\/acme-challenge {
        root /var/www/html;
        allow all;
    }

    location / {
        return 301 https://$server_name$request_uri;
    }
}

server {
    # http2 replaced spdy in nginx 1.9.5; on older versions use "spdy" here instead
    listen 443 ssl http2;
    listen [::]:443 ssl http2;
    server_name xan-manning.co.uk www.xan-manning.co.uk;

    client_max_body_size 20M;

    ssl_certificate /etc/letsencrypt/live/xan-manning.co.uk/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/xan-manning.co.uk/privkey.pem;
    ssl_protocols TLSv1 TLSv1.1 TLSv1.2;
    ssl_ciphers "ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:ECDH+3DES:DH+3DES:RSA+AESGCM:RSA+AES:RSA+3DES:!aNULL:!MD5:!DSS"; #Disables all weak ciphers
    ssl_dhparam /etc/nginx/dh.pem;
    ssl_prefer_server_ciphers on;
    ssl_session_timeout 30m;
    ssl_session_cache shared:SSL:10m;
    ssl_stapling on;
    resolver 8.8.8.8;
    ssl_stapling_verify on;
    add_header Strict-Transport-Security max-age=31536000;

    location / {
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header Proxy "";
        proxy_pass http://localhost:2368;
    }

    location ~ /\.well-known\/acme-challenge {
        root /var/www/html;
        allow all;
    }

    location ~ /\.ht {
        deny all;
    }
}

Let’s go over the main parts of the above config. The first block is small: plain old HTTP on port 80 is simply 301 redirected to HTTPS (443).

In the HTTPS block we have to define our certificate paths, remember to change these to your own paths. Below this is a fairly standard TLS configuration.

We then have 2 main location blocks. location / {} is used to define our proxy pass. All traffic visiting our webserver is going to be passed to our Ghost Docker container on port 2368 (http://localhost:2368).

Next we need to define our block for the acme-challenge for our certificate. This is done in the location ~ /\.well-known\/acme-challenge {} block. Here we are allowing all to visit this page. This will allow for validation of certificates. We are defining this as our shared webroot /var/www/html. Configure this however you want but remember this value for later on.

Generate our first certificate!

Before we enable the vhost in nginx, we need to create our first certificate. We prepared for this earlier by adding the acme-challenge location to the default vhost. Remember to use your own domain.

certbot certonly --webroot -w /var/www/html -d xan-manning.co.uk -d www.xan-manning.co.uk

Once you have generated your certificate it’s time to enable the nginx config.

ln -s /etc/nginx/sites-available/xan-manning.co.uk /etc/nginx/sites-enabled/xan-manning.co.uk

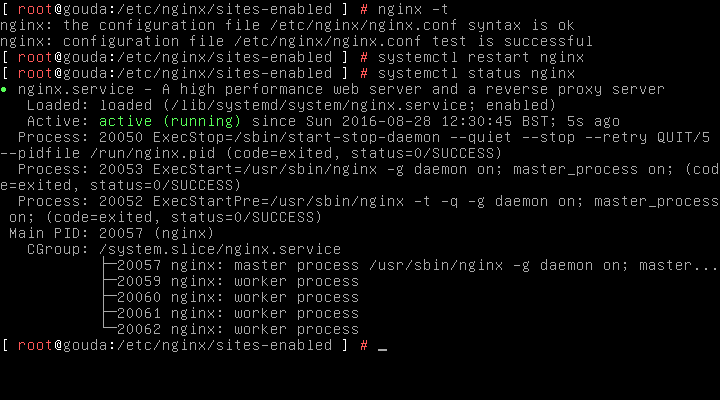

Run an nginx config test for good measure:

nginx -t

If all is good, restart nginx!

systemctl restart nginx
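The enable, test and restart steps above are easy to get wrong by a single typo in a path, so they can be wrapped in a small helper. This is a sketch under assumptions: enable_site is a made-up name, and the second argument exists only so the function can be tried against a directory other than /etc/nginx.

```shell
#!/usr/bin/env bash
# enable_site SITE [NGINX_DIR]
# Symlinks NGINX_DIR/sites-available/SITE into NGINX_DIR/sites-enabled/.
# Refuses to create a dangling link if the config file does not exist.
# (Hypothetical helper; NGINX_DIR defaults to /etc/nginx.)
enable_site() {
    local site="$1" nginx_dir="${2:-/etc/nginx}"
    local src="$nginx_dir/sites-available/$site"
    local dst="$nginx_dir/sites-enabled/$site"

    if [ ! -f "$src" ]; then
        echo "missing config: $src" >&2
        return 1
    fi
    # -f replaces an existing link, -n avoids following an existing symlink
    ln -sfn "$src" "$dst"
}

# Usage (as root), only restarting when the config test passes:
#   enable_site xan-manning.co.uk && nginx -t && systemctl restart nginx
```

Chaining the commands with && means nginx is only restarted when both the link and the config test succeed.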

Now, when you visit your new site you will see a 502 Bad Gateway error. Don’t panic. This is meant to happen! This means we haven’t started our Ghost container.

So what now? Easy…

Launch our production Ghost Blog

Go to our Ghost container webroot.

cd /var/www/docker/ghostblog/

Now we start up our Docker container with docker-compose. This time we are daemonizing it (running it in the background), so we need to use the -d argument.

docker-compose up -d

Et voilà! Visit your blog over HTTPS and go to the setup page (https://your.ip.or.hostname/ghost).
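Ghost can take a few seconds to come up after the container starts, which is why you may still see the 502 for a moment. A small polling loop can tell you when it is ready. This is only a sketch: wait_for_http is an invented helper name and it assumes curl is installed.

```shell
#!/usr/bin/env bash
# wait_for_http URL [TRIES] [DELAY_SECONDS]
# Polls URL until curl gets a non-error HTTP response, or gives up.
# (Hypothetical helper; defaults are 30 tries, 2 seconds apart.)
wait_for_http() {
    local url="$1" tries="${2:-30}" delay="${3:-2}" i
    for ((i = 1; i <= tries; i++)); do
        # --fail makes curl return an error on HTTP 4xx/5xx (such as the 502)
        if curl --silent --fail --output /dev/null --max-time 5 "$url"; then
            return 0
        fi
        sleep "$delay"
    done
    return 1
}

# Usage, from the host running the container:
#   wait_for_http http://localhost:2368/ && echo "Ghost is up"
```

Checking http://localhost:2368/ tests the container directly, while pointing it at your HTTPS URL tests the whole path through nginx.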