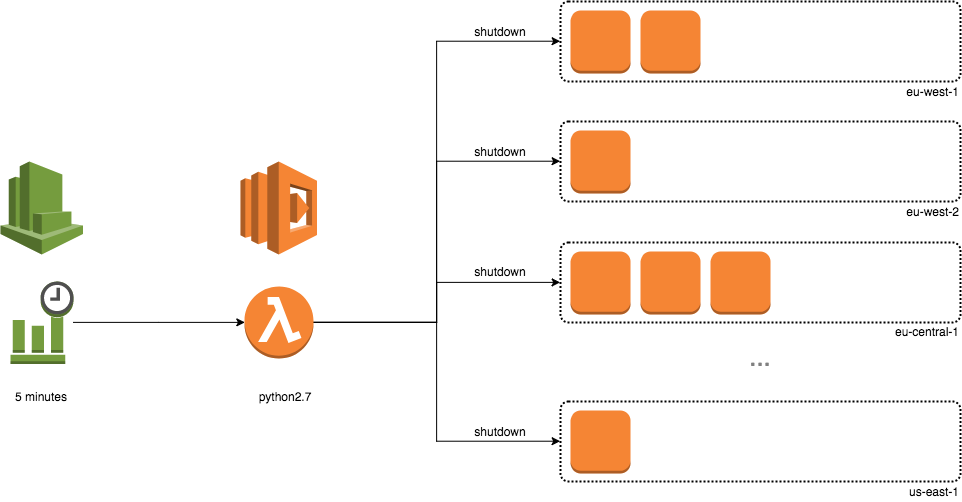

Today we are looking at how to shut down EC2 instances automatically at the end of the day, using a combination of Lambda and CloudWatch.

TL;DR: I’ve written a ‘stop EC2’ Lambda function, deployable with Terraform, to get you started. I haven’t written a ‘start EC2’ Lambda function yet. As a future improvement, I’d like to schedule instances programmatically using tags. Clone from: github.com/xanmanning/terraform-autostop

What we will end up with is a simple Lambda function that searches all of our regions and sends the stop-instances command to any tagged instance that is still running after 8:00pm.

To do this I will also be using Terraform as the process is then repeatable and can be versioned in git. I am fairly new to Terraform but I honestly cannot understand how I ever survived without it.

I’ve been asked on numerous occasions, at different employers, “How do I stop (and start) Amazon EC2 instances at regular intervals?”, and I stumbled upon an article written by AWS that uses Lambda.

This knowledge base article is quite basic; you effectively write two Lambda functions:

- Shut down a specific instance

- Start a specific instance

You then use a time-based (scheduled) event in CloudWatch to trigger these Lambda functions. It’s kind of like writing a Python script on your VM and triggering it with cron - although you obviously cannot power that machine back on with cron. I started looking at other solutions and, in December 2016, came across this guide while exploring how to turn the development EC2 instances of an API platform off and on to a schedule:

EC2 Scheduler Implementation Guide

That’s great, but because it needs a DynamoDB table it felt over-engineered for what we needed at the time. What I wanted was something in between these two solutions: scheduling tagged instances without a database.

Sadly, I was not able to build a solution due to a need to change employment.
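The AWS article and the Scheduler guide share the same trigger: a CloudWatch Events rule with a schedule expression that invokes a Lambda function. The sketch below is not code from either source. It assumes boto3’s `events` and `lambda` clients are passed in. It creates a daily rule, grants CloudWatch Events permission to invoke the function, and registers the function as the rule’s target. The function names, the rule name and the `-invoke` statement ID are hypothetical. Schedule expressions are evaluated in UTC.

```python
def utc_cron_expression(hour, minute=0):
    """Build a CloudWatch Events cron expression that fires daily at hour:minute UTC.

    Fields are: minutes hours day-of-month month day-of-week year.
    Day-of-week must be '?' when day-of-month is '*'.
    """
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute 0-59")
    return "cron({} {} * * ? *)".format(minute, hour)


def schedule_lambda(events_client, lambda_client, function_arn,
                    rule_name="stop-ec2-nightly", hour=20, minute=0):
    """Create a daily rule that invokes function_arn and return the rule ARN.

    events_client and lambda_client are boto3 clients, e.g.
    boto3.client('events') and boto3.client('lambda'). They are passed in
    so the function can be exercised without AWS credentials.
    Note: add_permission fails if the statement ID already exists.
    """
    rule = events_client.put_rule(
        Name=rule_name,
        ScheduleExpression=utc_cron_expression(hour, minute),
        State="ENABLED",
    )
    # Allow CloudWatch Events to invoke the function, but only from this rule.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=rule_name + "-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule["RuleArn"],
    )
    # Point the rule at the Lambda function.
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "1", "Arn": function_arn}],
    )
    return rule["RuleArn"]
```

In practice you would call `schedule_lambda(boto3.client('events'), boto3.client('lambda'), function_arn)`. Keeping the cron string in a separate helper makes the UTC timing easy to check on its own.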

Fast forward to November 2017, and I have been asked again for my thoughts on using Lambda functions for scheduling EC2s. For my own proof of concept I wanted to programmatically look for EC2 instances in any AWS region that are tagged for automatic shutdown. For an EC2 to be shut down automatically at the end of the day (8:00pm by the Lambda’s clock, which runs in UTC), it needs the tag `AutoStop: True`. Below is the Python 2.7 Lambda function:

```python
#!/usr/bin/env python2
#
# Autostop EC2 Lambda POC script.
# xmanning - 2017
#

from __future__ import print_function

import boto3
from datetime import datetime

# Poweroff time (24h format). Lambda's clock runs in UTC, so 2000 = 20:00 UTC.
poweroff_time = 2000

# Set to True only when debugging on a local machine.
local_debug = False


# StopEC2 Object
class StopEC2(object):

    # Initialize the required EC2 client and configuration
    def __init__(self):
        self.init_client = boto3.client('ec2')
        self.ec2_client = {}
        self.instance_stop_list = {}
        self.now = datetime.now()
        self.time = int(self.now.strftime('%H%M'))

    # Return a list of region names
    def list_regions(self):
        regions = []
        for region_info in self.init_client.describe_regions()['Regions']:
            regions.append(region_info['RegionName'])
        return regions

    # Create one EC2 client (and an empty stop list) per region
    def create_regions_ec2_clients(self):
        for region in self.list_regions():
            self.instance_stop_list[region] = []
            self.ec2_client[region] = boto3.client('ec2', region_name=region)

    def destroy_regions_ec2_clients(self):
        for region in self.list_regions():
            self.ec2_client[region] = None

    # Collect running instances tagged AutoStop: True in every region
    def list_ec2s_per_region(self):
        print('================================================')
        print('  Scanning Regions for stoppable EC2 instances  ')
        print('================================================')
        print('')
        print('Key:')
        print('  + EC2s with AutoStop Tag')
        print('  - EC2s to be ignored')
        print('')
        self.create_regions_ec2_clients()
        for region in self.list_regions():
            print('------------------------------------------------')
            print('EC2s running in {}'.format(region))
            print('------------------------------------------------')
            # Paginate so regions with many instances are fully scanned
            paginator = self.ec2_client[region].get_paginator('describe_instances')
            for page in paginator.paginate():
                for reservation in page['Reservations']:
                    for instance in reservation['Instances']:
                        if instance['State']['Name'] == 'running':
                            name = 'No Name'
                            autostop = '-'
                            for tag in instance.get('Tags', []):
                                if tag['Key'] == 'Name':
                                    name = tag['Value']
                                if tag['Key'] == 'AutoStop' and tag['Value'] == 'True':
                                    autostop = '+'
                                    self.instance_stop_list[region].append(instance['InstanceId'])
                            print('  {} {} ({})'.format(autostop, instance['InstanceId'], name))
            print('')
        print('Done')
        print('')

    # Stop every instance collected by list_ec2s_per_region()
    def stop_instances(self):
        print('')
        print('================================================')
        print('  Running Stop Procedure!')
        print('================================================')
        print('')
        for region in self.list_regions():
            if self.instance_stop_list[region]:
                print('------------------------------------------------')
                print('Stopping: {}'.format(self.instance_stop_list[region]))
                print('------------------------------------------------')
                self.ec2_client[region].stop_instances(
                    InstanceIds=self.instance_stop_list[region])
        print('Done')
        self.destroy_regions_ec2_clients()


def lambda_handler(event, context):
    client = StopEC2()
    if client.time >= poweroff_time:
        client.list_ec2s_per_region()
        client.stop_instances()
    else:
        print('It is not power off time! (>={})'.format(poweroff_time))


if __name__ == '__main__' and local_debug:
    lambda_handler({}, None)
```
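The function above fetches every instance in a region and checks the state and tags in Python. The repository does not do this, but `describe_instances` can also filter server-side, using the `instance-state-name` and `tag:<key>` filters. The sketch below assumes a per-region boto3 EC2 client is passed in, and `find_autostop_instances` is a hypothetical name. It follows `NextToken` so every page of results is read. Tag filter values match exactly, so `true` would not match `True`.

```python
def find_autostop_instances(ec2_client, tag_key="AutoStop", tag_value="True"):
    """Return IDs of running instances tagged tag_key=tag_value.

    ec2_client is a boto3 EC2 client for a single region,
    e.g. boto3.client('ec2', region_name='eu-west-1').
    """
    filters = [
        {"Name": "instance-state-name", "Values": ["running"]},
        {"Name": "tag:" + tag_key, "Values": [tag_value]},
    ]
    instance_ids = []
    kwargs = {"Filters": filters}
    while True:
        response = ec2_client.describe_instances(**kwargs)
        for reservation in response["Reservations"]:
            for instance in reservation["Instances"]:
                instance_ids.append(instance["InstanceId"])
        # Results are paged; keep requesting until there is no NextToken.
        token = response.get("NextToken")
        if not token:
            return instance_ids
        kwargs["NextToken"] = token
```

The result can be passed straight to `stop_instances(InstanceIds=...)`, as long as the list is not empty.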

Now, as mentioned, I like to use Terraform for managing my AWS infrastructure. It means I can spin up and tear down all my musings at a moment’s notice. There is one thing to note with AWS Lambda and Terraform: loading a function is not quite as straightforward as pointing your Terraform config at a .py file. You either have to upload a .zip of the script to an S3 bucket or point Terraform at a local .zip file. In the local case, Terraform also needs the file’s SHA-256 hash (`source_code_hash`) so it can tell when the code has changed. Luckily Terraform will work out this hash, but creating and updating the .zip file is a bit of a pain.

```hcl
resource "aws_lambda_function" "stop_ec2_instances" {
  filename         = ".lambda_stop_ec2_payload.zip"
  function_name    = "stop_ec2_instances"
  description      = "Shuts down unused EC2 instances."
  role             = "${aws_iam_role.lambda_start_stop_ec2.arn}"
  handler          = "stop_ec2.lambda_handler"
  source_code_hash = "${base64sha256(file(".lambda_stop_ec2_payload.zip"))}"
  runtime          = "python2.7"
  timeout          = 180
}
```
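`source_code_hash` is how Terraform notices that the payload has changed. `base64sha256` takes the SHA-256 digest of its input and base64-encodes the raw digest bytes. The sketch below is a minimal Python equivalent for a local file, handy for checking the value Terraform will compare against. The function name is hypothetical. Newer Terraform releases also provide `filebase64sha256()`, which hashes a file’s raw bytes directly.

```python
import base64
import hashlib


def base64sha256_file(path):
    """Base64-encoded SHA-256 of a file's bytes.

    This matches what Terraform's base64sha256() returns for the same bytes.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read in chunks so large payloads need not fit in memory at once.
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return base64.b64encode(digest.digest()).decode("ascii")
```

For example, `base64sha256_file(".lambda_stop_ec2_payload.zip")` prints a value you can compare with the `source_code_hash` shown in `terraform plan`.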

For this I have created a simple bash script that wraps around the Terraform binary. I recommend this approach because it speeds up your workflow for deploying to Lambda. The script first compresses the Python scripts in the payloads/ directory and then runs terraform plan/apply. This generates the .zip files and their associated hashes on the fly for upload to AWS Lambda.

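```bash
# Zip each script in payloads/ into ../.lambda_<name>_payload.zip.
# info and fatal are assumed to be logging helpers defined elsewhere
# in the wrapper script.
build_payloads() {
    info "Building payload files."
    local __PWD
    __PWD=$(pwd)
    cd payloads || fatal "Could not find the payloads directory"
    for file in * ; do
        # Strip the extension: stop_ec2.py -> stop_ec2
        local script="${file%.*}"
        info "Adding ${file} to .lambda_${script}_payload.zip"
        zip -r9 "../.lambda_${script}_payload.zip" "${file}" || \
            fatal "Could not build payload files"
    done
    cd "${__PWD}"
}
```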
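If the `zip` command isn’t available, for instance on a Windows workstation, the same packaging step can be done with Python’s standard `zipfile` module. The sketch below is a hypothetical equivalent of `build_payloads` and is not part of the repository. It assumes the same layout: scripts live in `payloads/`, and archives are named `.lambda_<name>_payload.zip`. Unlike the bash version, it skips subdirectories rather than zipping them recursively.

```python
import os
import zipfile


def build_payloads(payload_dir="payloads", out_dir=".."):
    """Zip each file in payload_dir into out_dir/.lambda_<name>_payload.zip.

    Returns the list of archive paths that were written.
    """
    archives = []
    for filename in sorted(os.listdir(payload_dir)):
        source = os.path.join(payload_dir, filename)
        # Mirror the bash glob: skip hidden files and directories.
        if filename.startswith(".") or not os.path.isfile(source):
            continue
        # Drop the extension, like "${file%.*}" in the bash version.
        script = os.path.splitext(filename)[0]
        archive = os.path.join(out_dir, ".lambda_{}_payload.zip".format(script))
        # Mode "w" recreates the archive, so stale contents never linger.
        with zipfile.ZipFile(archive, "w", zipfile.ZIP_DEFLATED) as zf:
            # Store the script at the archive root, where a handler such as
            # stop_ec2.lambda_handler expects to find stop_ec2.py.
            zf.write(source, arcname=filename)
        archives.append(archive)
    return archives
```

Run from inside `payloads/`, `build_payloads(".", "..")` writes the same files the bash function does. From the project root, `build_payloads("payloads", ".")` does the same.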

Feel free to clone my repository and play with the Lambda function.

https://github.com/xanmanning/terraform-autostop

Future improvements

- Change the tag `AutoStop: True` to be `PowerSchedule: 0800;2000` (08:00 - 20:00).

- Create a Start function that also reads the `PowerSchedule` tag.

- Have the option for a ‘default’ schedule for untagged instances.

- Exempt EC2s from Stop/Start with specific tags, e.g. `Production`.

- Add Paramiko support for running commands after EC2 start or before EC2 stop.
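None of these are implemented yet. To make the `PowerSchedule` idea concrete, here is a hypothetical sketch of how a start/stop function might interpret a tag value such as `0800;2000`. The tag format, the function names and the fixed UTC offset are all assumptions. A fixed offset ignores daylight saving time, and because Lambda’s clock is UTC the offset must be applied explicitly. The sketch needs Python 3, since `datetime.timezone` doesn’t exist in 2.7.

```python
from datetime import timedelta, timezone


def parse_power_schedule(value):
    """Parse a hypothetical 'HHMM;HHMM' PowerSchedule tag into (start, stop) ints."""
    start_text, stop_text = value.split(";")
    start, stop = int(start_text), int(stop_text)
    for hhmm in (start, stop):
        if not (0 <= hhmm // 100 <= 23 and 0 <= hhmm % 100 <= 59):
            raise ValueError("invalid HHMM value: {}".format(hhmm))
    return start, stop


def should_be_running(value, now_utc, utc_offset_hours=0):
    """True if local time falls inside the schedule's on-window.

    now_utc must be a timezone-aware datetime, for example
    datetime.now(timezone.utc).
    """
    local = now_utc.astimezone(timezone(timedelta(hours=utc_offset_hours)))
    hhmm = local.hour * 100 + local.minute
    start, stop = parse_power_schedule(value)
    if start <= stop:
        return start <= hhmm < stop
    # The window wraps past midnight, e.g. '2200;0600'.
    return hhmm >= start or hhmm < stop
```

A Start function could then call `start_instances` for stopped instances where `should_be_running(...)` is True. The Stop function would do the reverse, which removes the need for a single hard-coded `poweroff_time`.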